
Hundreds of Nazi accounts spread hate speech on the social video app TikTok; not only does the platform fail to remove these videos and accounts, but its algorithm also amplifies their reach and influence.

This revelation comes from a comprehensive report published on Tuesday by the Institute for Strategic Dialogue (ISD), an independent global non-profit organization based in London that is dedicated to combating polarization, extremism and disinformation. Its notable donors include the Bill & Melinda Gates Foundation.

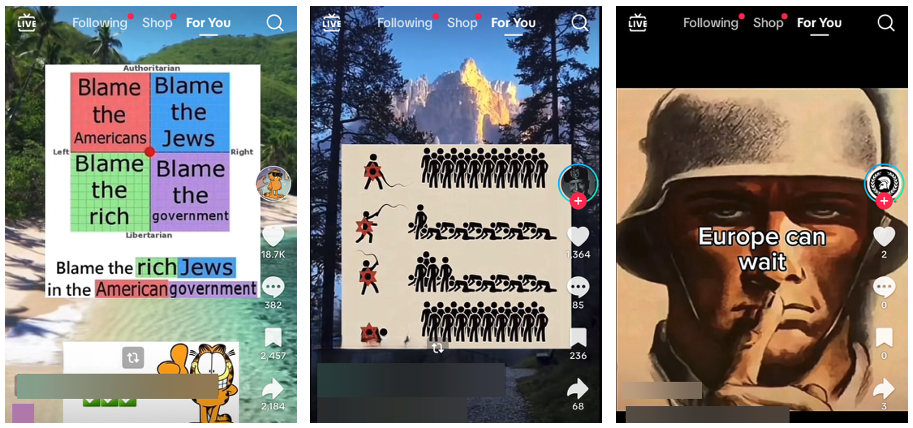

According to the report, TikTok hosts hundreds of accounts that openly support Nazism and use the platform to promote their ideology and propaganda. The pro-Nazi content these accounts produce has garnered tens of millions of views on the app. It includes videos that deny the Holocaust, glorify Hitler and Nazi Germany, present Nazism as a solution to contemporary problems, and support mass killings by white supremacists, and even footage or reenactments of those massacres.

ISD researchers say that TikTok fails to remove these hateful videos and accounts even when users report them. The researchers reported 50 accounts to the platform for violating TikTok's "community guidelines" on hateful ideologies, promotion of violence, Holocaust denial and other rules, but TikTok returned every report with the designation "no violation," and all of the accounts remained active. Together, these accounts had garnered more than 6.2 million views. They included accounts that explicitly promoted Nazism or displayed hate speech, for instance through Nazi symbols in their profile pictures or usernames, nicknames and descriptions derived from Nazi imagery.

According to the report, the reach of accounts promoting Nazism is automatically amplified by TikTok's algorithms: the platform quickly recommends such content to users who frequently consume far-right hate content.

Coordinated networks move content

According to the research, the people behind these videos and accounts operate on several social networks simultaneously and coordinate with each other by sharing videos, images and downloadable sounds. On TikTok, the researchers uncovered at least 200 such accounts; on Telegram, they compiled a list of more than 100 pro-Nazi channels. To track the accounts, ISD researchers used dummy accounts, among other methods, and monitored the content the platform automatically pushed to them.

The researchers identified several prominent trends in hateful content on TikTok. The first is the use of generative artificial intelligence, often to create caricatures that dehumanize non-white groups by portraying them as violent or otherwise threatening to white communities. The main aim is to modernize Nazi propaganda. One popular video is an AI-generated translation of a speech by Adolf Hitler; another promotes the neo-Nazi documentary "Europe: The Last Battle."

Another trend is the sophisticated use of audio: fascist songs and melodies serve as coded references that help the content evade TikTok's moderation algorithms. The music itself usually does not violate the platform's rules, but it is often combined with memes and images promoting Nazism, fascism and white supremacy.

For example, a TikTok search for a song that the gunman played during the 2019 mosque attacks in Christchurch, New Zealand, in which he killed 51 Muslim worshippers, yielded 1,200 videos. Seven of the top 10 results celebrated the massacre and its motive and glorified the gunman, including a reenactment of the massacre in a video game. These seven videos alone drew a combined 1.5 million views, and hundreds of similar videos are likely circulating freely on the platform.

Use of codes and hints

One tactic the researchers identified is coded communication between content distributors to evade detection. The codes include emojis, abbreviations, numbers and coded images, which do make it difficult for TikTok to detect such extremist accounts. Another common tactic is to open videos with innocent or nostalgic images that quickly transition to extreme content.

While the campaign includes efforts to "wake people up" on other platforms, its central effort is spreading propaganda on TikTok, mainly because of the app's broad reach. Even when accounts are banned, their owners can often quickly create new ones. According to ISD, several real-world fascist and far-right organizations also use the platform for open recruitment.

After identifying and documenting 200 accounts, ISD reported 50 of them to TikTok to see how, if at all, the platform would act on them. TikTok found no violations, and all of the accounts were still active the next day. After two weeks, however, 15 of them had been banned, and after a month almost half had been banned. This suggests that TikTok does not immediately take down accounts promoting hate speech and pro-Nazi propaganda. While it does eventually remove some of them, it often does so only after weeks or months, by which time they have accumulated significant views and the damage has already been done.

ISD also has branches in Washington, Berlin, Amman, Nairobi and Paris. The institute has previously researched the spread of misinformation and disinformation related to climate change, public health and election integrity, among other topics. It collaborates with governments and works on funded projects with tech companies and large organizations.

A TikTok spokesperson said earlier this week in response: "There is no place on TikTok for hate speech or ideologies and organizations that encourage hatred. We remove over 98% of this type of content before it is reported to us on the app. We work with experts to proactively identify the development of such trends and strengthen our defenses against hate ideologies and groups."