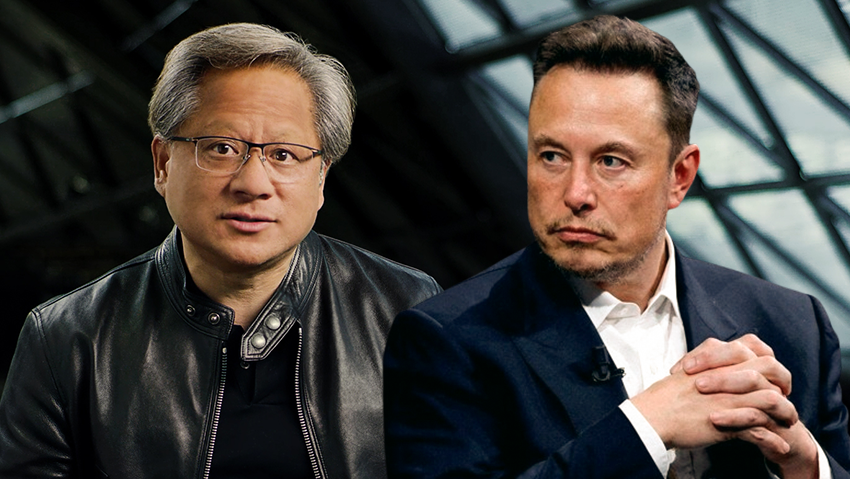

If anyone needed an explanation for the meteoric rise in Nvidia's value, the headlines from the past few days provide a clear answer: Elon Musk, owner of the AI firm xAI, has redirected thousands of Nvidia AI chips from his automotive company Tesla to his latest Generative AI venture.

According to a report by CNBC last week, Musk decided that 12,000 H100 AI processing units (GPUs) intended for Tesla will be rerouted to xAI. This purchase is valued at approximately $500 million. The shipments to Tesla have been postponed to January and June of next year.

Critics of this audacious move are quick to remind Musk of his previous statement that Tesla is on its way to becoming a "leader in AI and robotics," through the purchase of 85,000 chips, with a total investment of $10 billion. This move casts a notable shadow over that statement.

Not one to take criticism lying down, Musk released a series of tweets, the most notable of which stated that Tesla, his pride and joy, currently cannot utilize these chips anyway. Thus, instead of letting them gather dust in warehouses, he decided they would serve the urgent need to advance the chatbot Grok, his other pride and joy, in competition with OpenAI's GPT-4 and its more advanced iterations. Musk had previously declared his intention to build the world's largest GPU cluster, essentially a data center and supercomputer, in North Dakota. This center will replace the servers of Amazon and Oracle, which xAI currently uses.

Musk's preference for xAI over Tesla puts him in a notable conflict of interest, as frustrated Tesla investors are forced to watch him devote his near-undivided attention and resources to the Generative AI arena, which he aims to dominate. The move is also a clear indication of Nvidia's importance in this emerging tech landscape: every chip the company manufactures is fought over by would-be buyers.

From NVIDIA's perspective, everyone is more than welcome to play, as long as the competition's infrastructure is built from its GPU chips. The situation is a truly unexplained anomaly: almost two years into the titans' AI war, NVIDIA's platform is still the undisputed hardware leader in the field, with a market share of over 70% in AI chips. This boosts its value. NVIDIA's strategy for maintaining its position rests on a software platform supplied with the chips, which lets customers develop AI applications easily. In effect, it provides the software that powers the chips, and that software is not compatible with other software platforms. It is also incompatible with other chips, so it cannot be used to develop applications for Intel chips, for example. This is precisely Apple's strategy, applied to the AI development world.

A new chip every year

NVIDIA is the third most valuable company in the world, reaching a $2.7 trillion valuation last month. Since the beginning of the year, its stock has surged by 132%. Over the past five years, NVIDIA's stock has risen by 3,174%. The company's CEO, Jensen Huang, said last month that the company is struggling to keep up with demand. "Customers are consuming all the existing GPU chips," he said in an investor call. Following his remarks, the company's stock surged again and its value grew by an additional $350 billion.

Analysts predict that if this growth rate continues, it won't be long before NVIDIA surpasses Apple in market value. Apple's current value is close to $3 trillion. The value of Microsoft, the top-ranked company, is around $3.1 trillion, meaning that with the same momentum, NVIDIA could become the most valuable company in the world. Some say that the planned stock split (which will increase its accessibility to the general public), scheduled to occur at the end of next week, will be the necessary boost.

This is all happening just two days after the company unveiled the next generation of AI chips at the Computex conference in Taipei, Taiwan. In the conference's keynote speech, Huang presented the new AI platform – Rubin – which will replace the current Blackwell platform in 2026. This is slightly unusual given that the new Blackwell was launched only in March this year, and NVIDIA is currently delivering its first shipments.

According to Huang, we can all expect an accelerated pace, with new chips introduced year after year. This puts established competitors such as Intel and AMD in a difficult position: will they be able to pose any challenge to the behemoth? One analyst, Richard Windsor, said: "It's clear NVIDIA intends to maintain its dominance for as long as possible. Currently, no challenges can be seen on the horizon."

The main part of Huang's presentation focused on expanding the use of Blackwell. NVIDIA showcased a series of collaborations with companies developing supercomputer systems for artificial intelligence. Alongside these were systems with a single GPU chip, graphics processors, and combined CPU and GPU systems, both air-cooled and liquid-cooled, all based on the Blackwell platform. NVIDIA also intends to take a central place in the vision of the AI-based personal computer.

Will NVIDIA continue to dominate the field of AI chips without growing competition? Huang will likely be the first to say that competition will intensify at some stage. IBM once dominated the computer world with an iron fist until competitors overcame it. NVIDIA's main competitors are Intel and AMD, each of which has just launched the next generation of its AI chips. Intel has symbolized missed opportunities in recent years, first with smartphone chips and then with AI chips. The acquisition of the Israeli company Habana Labs was intended to boost Intel's ability to offer chips that compete with NVIDIA's GPUs.

Playing catch up

Competitors try to close the gap, not always successfully

At the Taiwan conference, AMD CEO Lisa Su presented the company's new AI processors, including the MI325X chip, which will be available in the fourth quarter of 2024. The day after, Intel CEO Pat Gelsinger announced the sixth generation of Intel's Xeon chips for data centers and the Gaudi 3 AI chips – from Habana Labs. He noted that the Gaudi capabilities are equivalent to NVIDIA's H100, at one-third of the price. Will this tempt customers to escape NVIDIA's hot, locked and expensive embrace? Doubtful, mainly because switching to Intel's platform is a strategic and costly change that no one is interested in during the height of competition.

A new competition is emerging in the developing market of AI-integrated personal computing. Until now, desktops and laptops were based solely on CPU chips, with graphics chips (GPUs) only assisting them in computationally intensive tasks. Now, NVIDIA intends to reverse the equation and place its GPU at the center of things, at this stage through collaborations with Asus and MSI. Facing it is Microsoft, which recently introduced a series of AI-integrated Copilot computers and tablets based on Qualcomm's new chip. That chip, like NVIDIA's, poses a threat to both Intel and AMD.

Microsoft is at the center of another front against NVIDIA: competition from NVIDIA's own cloud customers. Microsoft and Meta, as well as Google, Amazon and Oracle, which operate the world's largest cloud services, are NVIDIA's biggest customers. These companies are forced to pay NVIDIA $30,000 for each H100 server, and they consume tens of thousands of them. Microsoft and Meta alone paid NVIDIA about $9 billion in 2023 for these chips. The other three paid an additional $4.5 billion. A series of Chinese companies, including Tencent, Baidu and Alibaba, paid several billion more.

These companies have long understood that they must develop their own alternatives. Some develop them in Israel, with the aim of reducing dependence on NVIDIA. If successful, the effort would significantly reduce NVIDIA's revenues. Will it succeed? On the fringes, perhaps. In their core businesses, however, NVIDIA's customers will need it for many years to come.

But what about NVIDIA's biggest customers? These are the large AI service companies, such as OpenAI with Microsoft, Google with DeepMind, Amazon with Anthropic and Musk's xAI, among many others. They are required to spend vast sums on buying chips from NVIDIA and must also compete with one another for a limited supply, since there aren't enough chips to go around.

One of the main initiatives from this direction comes from Sam Altman, CEO of OpenAI, who seeks to establish a new company to manufacture AI chips (temporary name "Tiger") with a total investment of up to $7 trillion. This amount, which may seem fantastical, reflects how much demand in the AI chip industry is currently either unmet or met only at unreasonably high prices. Altman has said that constraints in chip infrastructure limit OpenAI's growth. He even tweeted on X that competition in the field is essential. At the beginning of the year, he visited South Korea and met with senior chip manufacturing executives at Samsung and SK Hynix, and some of his other visits around the world are dedicated to recruiting investors for the venture.

Recently, we heard about another challenge to NVIDIA, initiated by SoftBank's CEO Masayoshi Son. Reuters reported that he seeks to raise about $100 billion and establish a venture called "Izanagi" to compete with NVIDIA in AI chips. $30 billion will be invested by SoftBank, and $70 billion will come from other investors, some from the Middle East. SoftBank is the controlling owner of the British chip designer Arm, which currently focuses mainly on developing CPU chips.

If some of these attempts succeed, they might somewhat restrain NVIDIA's forward rush, which crushes everything in its path. Will this result in a decrease in AI usage costs for consumers? That's the trillion-dollar question.