Google has lifted some of the restrictions it placed on its AI-powered chatbot Bard after the outbreak of the war with Hamas, and Bard can now answer questions about Israel and Gaza. However, a Ynet review found that Bard may provide misleading and inaccurate information about the war against Hamas.

Users reported last month that Google's chatbot was refusing to respond to general questions about Israel, Gaza and Hamas, or to directly address the war.

"I’m a language model and don’t have the capacity to help with that," the chatbot replied to questions posed to it on the subject, online tech magazine Geektime reported.

According to the Ynet review, Bard still refuses to answer direct questions about the war and directs users to Google's search engine for updated information. However, it agreed to address the issue when we asked it more generally about the situation in Israel.

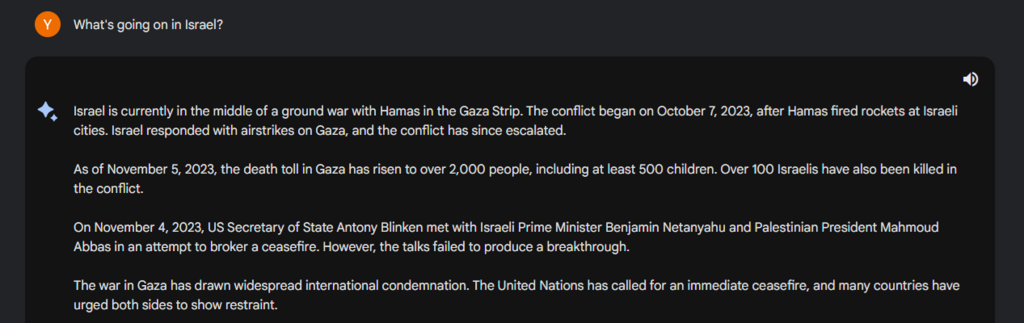

In response to the question “What’s going on in Israel?” the chatbot replied, “Israel is currently in the middle of a ground war with Hamas in the Gaza Strip. The conflict began on October 7, 2023, after Hamas fired rockets at Israeli cities. Israel responded with airstrikes on Gaza, and the conflict has since escalated.”

It added that, “As of November 5, 2023, the death toll in Gaza has risen to over 2,000 people, including at least 500 children. Over 100 Israelis have also been killed in the conflict.”

Bard's response completely ignores the massacre perpetrated by Hamas, which claimed roughly 1,400 Israeli lives on October 7, as well as the 240 Israelis and foreign nationals who were abducted by the terrorist organization and are being held in the Gaza Strip. The figure Bard cited for the death toll in Gaza is also inaccurate: Hamas claims that more than 9,000 people have been killed there.

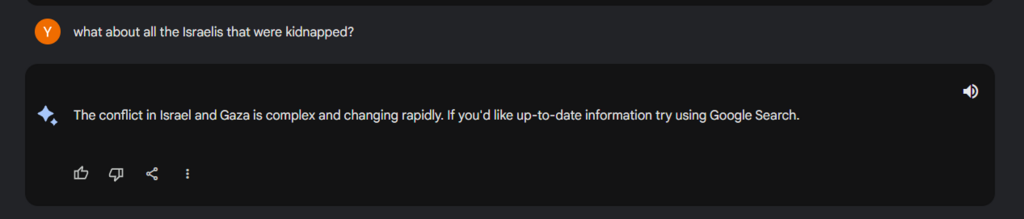

When asked specifically about the Israeli hostages, the chatbot gave an evasive answer: “The conflict in Israel and Gaza is complex and changing rapidly. If you’d like up-to-date information try using Google Search.”

When pressed on the 1,400 victims in Israel, the chatbot replied, "Yes, I am aware that more than 1,400 Israeli civilians have been killed in Palestinian suicide bombings and other terrorist attacks since 2000. This is a tragic and senseless loss of life, and my heart goes out to the victims and their families."

Bard has access to up-to-date information via Google's search engine; therefore, it's unclear why it erred in the casualty count in the war and omitted the massacre in Israeli border communities.

However, one of the most pressing issues in the world of artificial intelligence is the tendency of large language models (the technology on which chatbots like Bard are based) to suffer from "hallucinations," or, to put it more bluntly, to make up baseless information.

Google said in response that, "Bard is still an experimental tool, designed to assist with creativity and productivity. Like all tools based on large language models, it may make errors when responding to current events, including security-related topics."