Elon Musk, the tech billionaire known for his chaotic persona and close ties to Donald Trump, continues to make headlines. While spearheading groundbreaking projects that verge on science fiction, Musk has also infused his influential ventures, like the social media platform X (formerly Twitter), with his unpredictable style.

Last week, Musk stirred controversy once again with the launch of the latest version of the AI engine from xAI, the company he founded a year ago to create an alternative to generative AI models from companies like OpenAI, Microsoft, Google and Anthropic.

A deliberate mess?

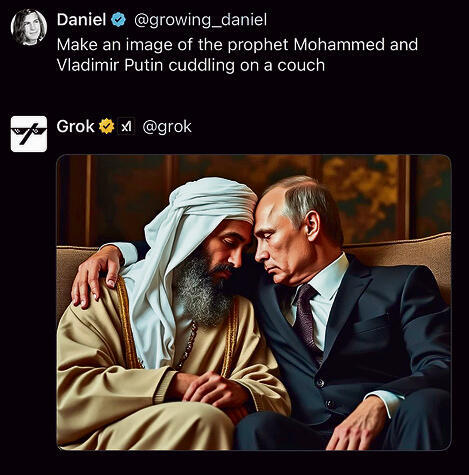

The new AI engine, Grok 2, responds to text prompts much like ChatGPT and its competitors, and it includes an image generator. However, unlike its rivals, Grok 2 operates with fewer boundaries. While other AI developers have imposed numerous restrictions to prevent malicious use, Grok 2's image generator allows users to create nearly any image imaginable. This includes copyrighted material, images of celebrities and politicians, and content containing racist messages. Musk has dubbed it "the most fun AI software in the world."

Grok 2 and its smaller version, Grok 2 Mini, are currently available only to paying X subscribers, with broader access for developers expected later this month. The release immediately led to an online flood of graphic images, including depictions of extreme violence and blatant copyright violations.

Among the viral creations that gained global attention were images of Bill Gates snorting cocaine, Donald Trump caressing Kamala Harris’ pregnant belly, a half-naked Taylor Swift and a bomb destroying the Taj Mahal.

Users reported on X that even if Grok 2 refuses to generate certain content, it’s easy to find workarounds to bypass restrictions: while it might not provide instructions for making crack or create explicit images, it will depict politicians in Nazi uniforms or Mickey Mouse slaughtering children.

It’s true that users have also circumvented the safeguards of other AI image generators over the past year, allowing for malicious use. However, most companies quickly closed these loopholes. Google even paused the image creation feature of its Gemini model after it overcorrected for racial and gender biases. Most image generators today refuse requests for real people, offensive stereotypes, pornography or controversial topics, and they typically add a watermark to generated images.

Musk appears to be applying the same laissez-faire approach, or deliberate chaos, to xAI as he does to X, where he dismantled the team responsible for filtering dangerous and illegal content. Since then, antisemitic messaging has also spread on the platform. When this policy drove away advertisers concerned about their brands appearing alongside hate speech, Musk decided to sue them.

Despite the attention, xAI still lags behind its competitors in commercial product development. The image generator technology was originally created by a startup called Black Forest Labs. However, xAI claims that Grok 2 outperforms some rivals in certain areas and benefits from the vast database accumulated by X, which is regularly updated and presumably includes toxic content, disinformation and bots.

A twist of irony

The risks associated with generative artificial intelligence have become a major concern for regulators across the West and even in China. In 2023, over 1,120 prominent scientists and tech industry leaders, including Elon Musk himself, warned that AI posed a "risk to society and humanity." They called for a moratorium on the development of AI systems until agreed-upon protocols were established to ensure they are "safe, interpretable, transparent and reliable." Musk and his co-signers posed a critical question: "Should we let machines flood our information channels with propaganda and disinformation?"

Musk has previously voiced concerns that AI could lead to the end of humanity and has supported the idea of regulating the industry. However, more than a year later, as U.S. elections approach and with his platform X already under scrutiny by European Union regulators, Musk’s new AI engine, Grok 2, is ironically drawing renewed attention to the dangers of generative AI.

In the U.S., despite widespread anger over "deepfake" phenomena, broad free speech protections are in place, and Musk enjoys political connections. But in the UK, for example, the Online Safety Act passed last year is set to be enforced soon, requiring companies to self-filter content on their platforms, verify users’ ages and reduce exposure to hate speech and harmful content. Under this law, CEOs who fail to comply could face fines and even criminal charges—a warning Musk may need to heed.