Israel’s Fighting Online Antisemitism (FOA) organization recently reported that social media platforms are not making an effort to address the influx of content from Hamas’ October 7 attack, content supporting the terrorist organization, and content encouraging antisemitism.


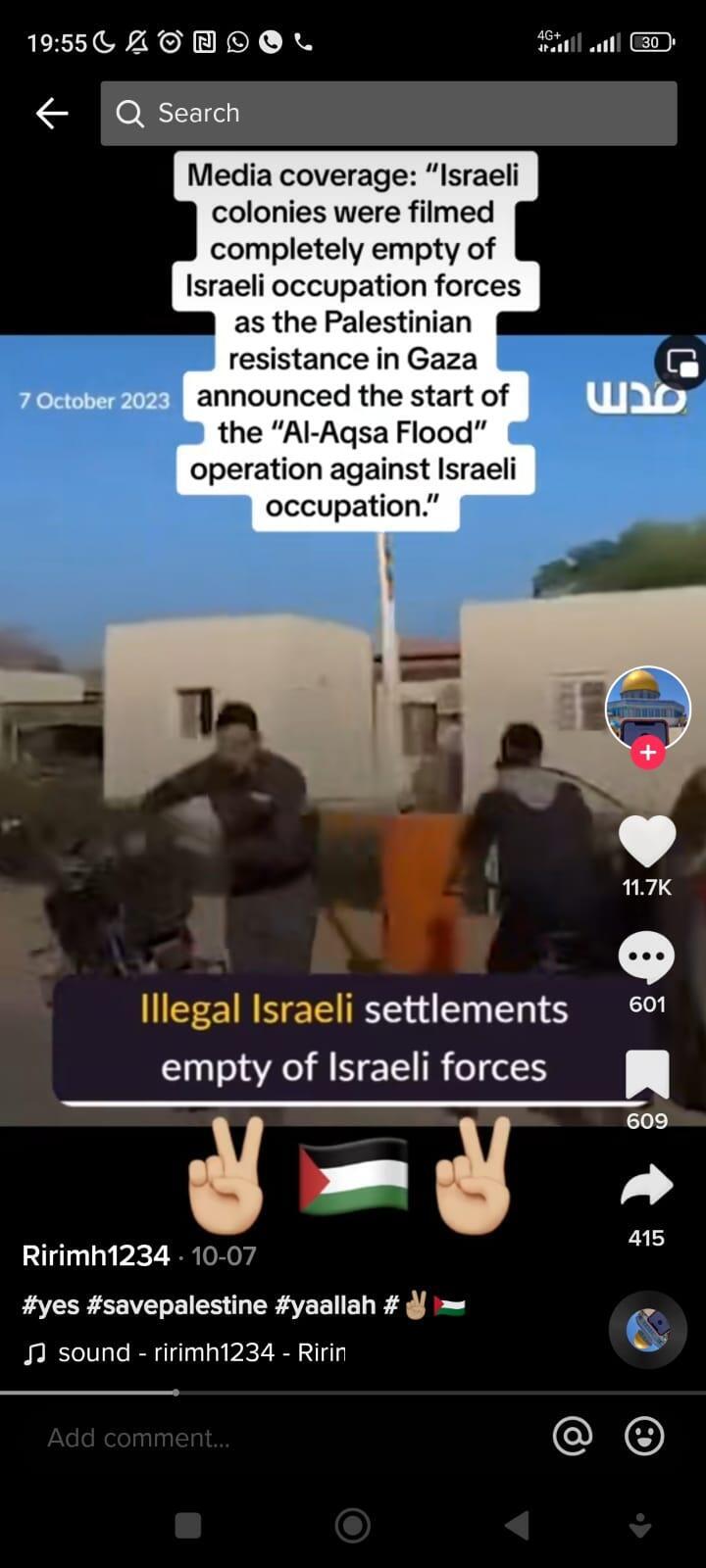

According to the movement, TikTok's reporting form, unlike those of other platforms including Facebook, X (formerly Twitter), Instagram, and YouTube, offers no explicit option for marking content as dealing with terrorism.

"One of the facts that makes TikTok a fertile ground for incitement and terror content is the inconsistency in removing content," explained Barak Aharon, a monitoring director at FOA. "Therefore, it is difficult for us to understand the method regarding content removal in the platform. Most of the content we’ve seen on TikTok isn’t direct, such as people inciting terror. Such content isn’t always immediately removed by TikTok, but is removed after our direct appeal to them."

Is there an example of such a video?

"One of the association's volunteers reported a TikTok video in Arabic praising Hamas leaders and calling to join the fight to conquer the land, until the occupation of Jerusalem. TikTok responded that the video did not violate its community guidelines. The movement spoke with its contacts on TikTok requesting for a reexamination of the content, and the video was removed after a few days.”

"Unfortunately, this is just one of many similar videos, and the association doesn’t have the resources and budget to deal with such a large amount of content flooding these platforms," he explained.

Following the outbreak of war, FOA established a volunteer-based task force numbering in the thousands, dedicated to identifying antisemitic, anti-Israeli, fake, and terror-inciting content in various languages, with the goal of reporting it and having it removed from social media platforms.

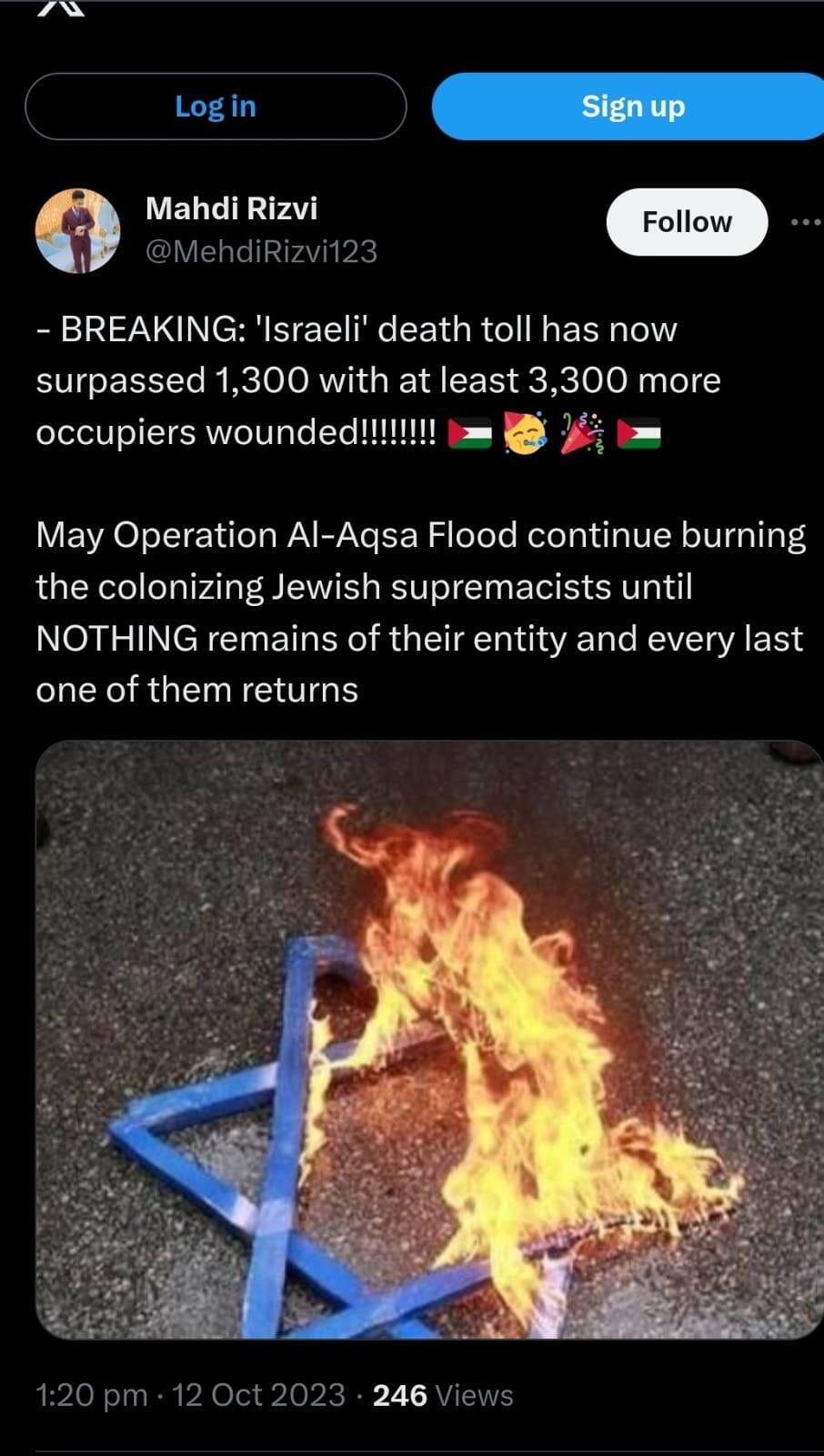

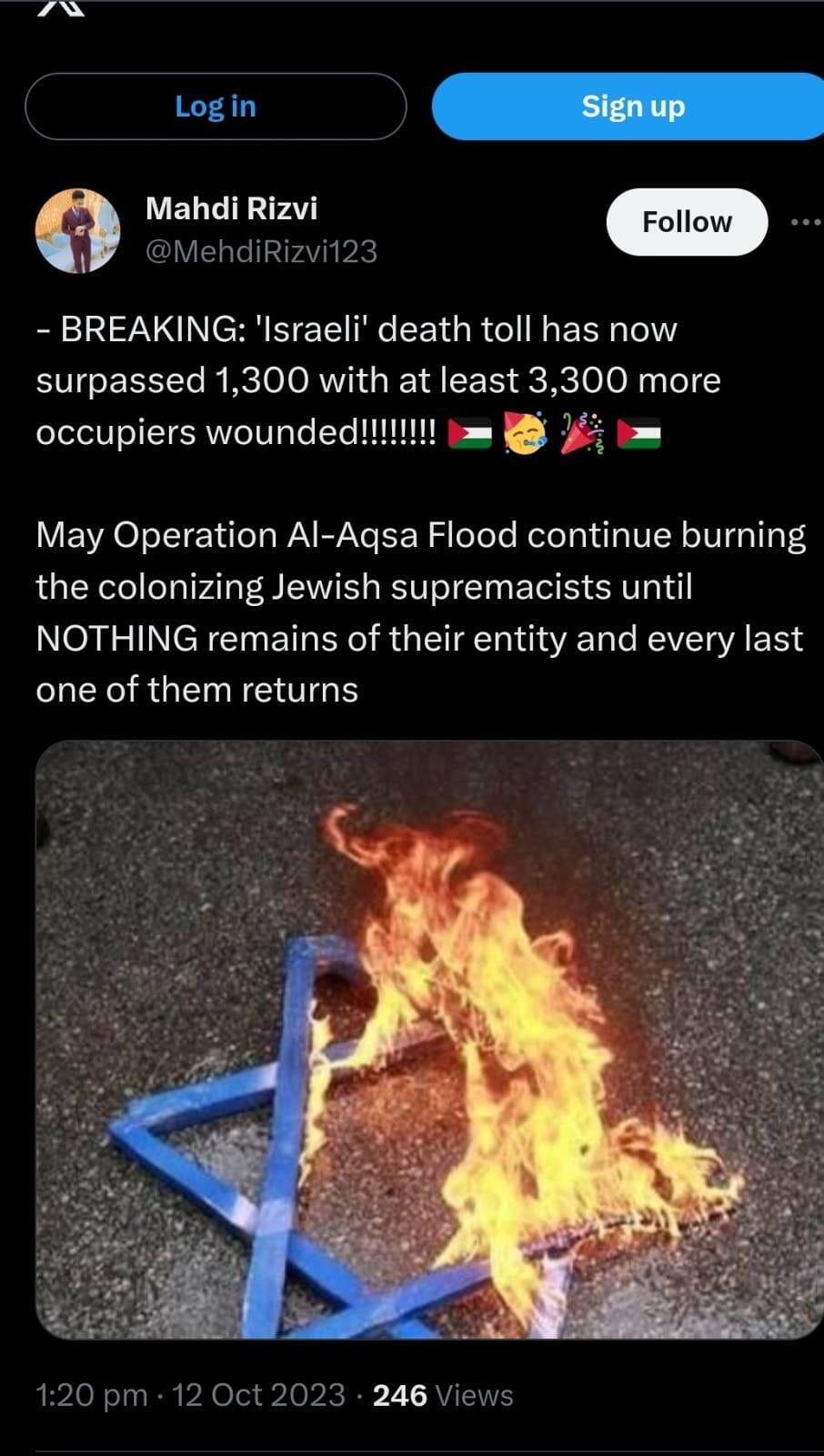

"The most significant increase has been observed on X, where the antisemitic content includes, among other things, threats to Jewish lives, incitement to hatred and violence, hate speech, and even outright denial of Hamas’ massacre," said Tomer Aldubi, FOA’s head.

"Unfortunately, social media policies still don’t address all content relating to the attack. For example, on Meta, content denying the massacre isn’t removed unless it explicitly supports the terror organization."

Meta didn’t respond to the comments, and X does not typically respond to media inquiries. TikTok responded in a statement: "TikTok’s reporting options don’t include all of our community guidelines categories, as they’re too numerous. Those that exist present the main categories, under which various subcategories are classified, including 'terrorist activity.' Any report received in the system is examined according to the category it is reported under but may also be classified according to other categories for violation of our community guidelines."


Antisemitic content on social media

(Photo: Screengrab, Fighting Online Antisemitism)

On Tuesday, TikTok announced that between October 7 and October 31, it had removed more than 925,000 videos in the Israel and Gaza regions for violating its policies on violence, hate speech, misinformation, and terrorism, including content promoting Hamas. "TikTok has a strict policy against hate speech, including antisemitism and Islamophobia, which have no place on the platform," the statement added.

So far, 730,000 videos have been removed worldwide for violating community guidelines related to hate speech, and over 50,000 videos containing misinformation have been taken down. Additionally, more than half a million comments made by bots under hashtags related to the war were removed.