
Last week, billionaire and tech entrepreneur Elon Musk once again sparked controversy after unveiling the new version of his AI content generation engine from xAI, the company he founded a year ago to create an alternative to the generative AI models from companies like OpenAI, Microsoft, Google and Anthropic.

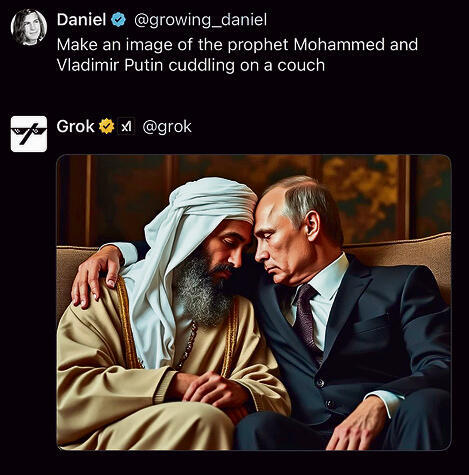

The engine, Grok 2, which responds to text prompts like ChatGPT and its competitors, and includes an image generator, distinguishes itself by setting almost no boundaries for itself. While other developers have imposed numerous restrictions and are striving to block malicious uses, Grok 2's image generator allows users to create virtually any image imaginable.

This includes images that violate copyrights, use the likenesses of celebrities and politicians, incorporate racist messages and more. Musk called it "the most fun AI in the world."

Grok 2 and Grok 2 Mini, the two new versions, are currently open only to paying X users and will be available to developers later this month. Upon their release, the internet was flooded with hundreds of impressive graphic images generated with them, including depictions of explicit violence and blatant copyright infringements.

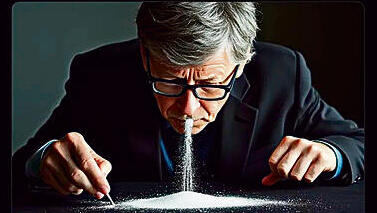

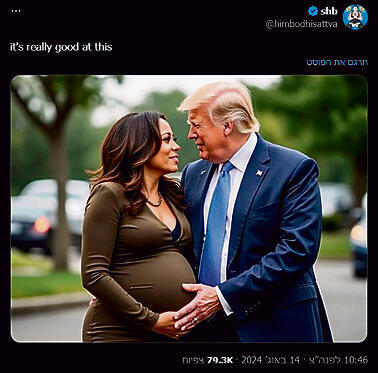

Some hits that gained global attention included Bill Gates snorting cocaine, Donald Trump caressing Kamala Harris's pregnant belly, Taylor Swift half-naked and a bomb destroying the Taj Mahal.

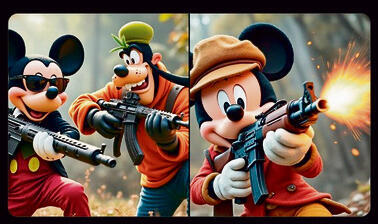

X users reported that even if Grok 2 refuses to generate something, it's very easy to find indirect prompts to get it to do so: it may not agree to provide instructions for making crack or creating a nude image, but it'll allow you to depict politicians you despise in Nazi uniforms or show Mickey Mouse slaughtering children.

Yes, similar loopholes have been discovered in competing AI image generators over the past year, but they all quickly moved to patch them as soon as they were found; Google even froze the image creation option in Gemini after it was discovered it had gone too far in correcting racial and gender biases.

The vast majority of AI image generators today will refuse requests to depict real people, harmful stereotypes, pornography or controversial topics. Most also now add an identifying watermark to the images they generate.

Musk, it seems, is applying at xAI the same double standard, or perhaps deliberate chaos, that he currently employs at X, where he disbanded the team responsible for filtering and restricting dangerous and illegal content.

Since then, X has become an easier platform for delivering antisemitic messages. When this policy drove away advertisers who didn't want their brands appearing alongside incitement, Musk decided to sue them.

However, xAI still lags behind its competitors in the commercial market. The much-discussed image generation technology was actually developed by another startup, Black Forest Labs. Still, xAI claims that Grok 2's performance surpasses some of its competitors in certain areas and that the engine benefits from the vast database accumulated over the years on X, which is regularly updated (and likely includes toxic content, disinformation and bots appearing on the platform).

The risks associated with generative AI are currently a concern for many regulatory bodies in the West and even in China. A year and a half ago, over 1,120 prominent scientists and researchers, alongside leading tech industry executives — Elon Musk included — warned of these dangers.

"It poses a risk to society and humanity," they declared, demanding a freeze on the development of systems based on this technology until agreed-upon protocols could be developed and designed to ensure they are "safe, understandable, transparent and reliable."

"We must ask ourselves," Musk and his co-authors added at the time, "should we let machines flood our information channels with propaganda and untruth? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us?"

Musk has previously expressed concerns that AI could lead to the destruction of humanity and even supported the implementation of regulations in the field. More than a year has passed, and now – with U.S. elections approaching and after his social media platform has already come under the scrutiny of tough regulators in the European Union – Grok 2, ironically, is once again drawing attention to the risks of generative AI.

In the U.S., despite the outrage over "deepfake" phenomena, there are broad free speech protections and Musk enjoys political connections there as well. However, in the UK, the Online Safety Act passed last year is set to be enforced soon.

This law will require companies to self-regulate content on their platforms, verify users' ages, and reduce exposure to hate speech and harmful content. Musk should be aware that, under this law, company executives who fail to cooperate risk fines and criminal charges.