Fake news, conspiracy theories, graphic videos, hate speech and bot armies. "Chaos," is how Yossef Dar, co-founder and chief product officer at Cyabra, describes what has been happening on social media since the war with Hamas began last Saturday.

Cyabra, which monitors threats on social media, examined about 734,000 posts and comments about the war posted from approximately 450,000 accounts on Facebook, X (formerly Twitter), Instagram and TikTok in recent days. "We're mostly seeing active participation of fictitious users pushing narratives," Dar explains.

Reports of war against Hamas on social media described as 'chaos'

(Photo: Image processing/ Thaspol Sangsee / Shutterstock.com, MAHMUD HAMS / AFP)

The company examined 162,000 accounts that have been taking part in the discourse and checked their authenticity. The findings are alarming: Over 40,000 accounts, making up about 25% of the accounts examined, are fictitious.

"The fake accounts tried to dominate the discourse, circulating more than 312,000 postings and comments over a two-day period," the company stated in its report. The content generated by the fake profiles has received more than 371,000 engagements (total comments, likes and shares) and its potential reach is estimated at 531 million accounts.

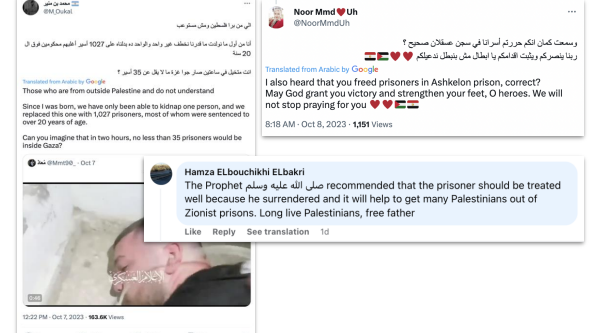

Cyabra identified three narratives pushed by the fictitious accounts: the claim that the abduction of Israelis to the Gaza Strip will lead to the release of Palestinian prisoners from Israeli prisons; efforts to improve Hamas' international reputation by circulating a video of an Israeli woman with her two children in which the "Palestinian soldiers" do not physically harm her; and attempts to justify the massacre near Gaza by citing violence by Israeli security forces against worshippers at the Al-Aqsa Mosque.

Can you tell who’s behind these bots?

Dar: "That’s not our job, but I can tell you that campaigns of this nature and magnitude are not funded by a single organization – it looks like there’s a state behind this."

What else have you been seeing on social media over the last few days?

"We see bad discourse and malicious content. But we have to be honest and say that there’s also a great deal of support for Israel. Some of the profiles we examined are well disposed toward Israel, including profiles from overseas. We’ve not seen this before. In no other operation have we seen such positive feeling toward the country."

Israeli startup CHEQ, which fights online bots, is also currently closely monitoring social media. "The situation over the past few days is shocking and repulsive," the company's CEO, Guy Tytunovich, told Ynet. "We’re dealing with monsters. Hamas distributes horror videos to influence opinion and erode deterrence. You see horrendous posts with hundreds of thousands of hearts and likes."

Tytunovich estimates that, in total, hundreds of thousands of bots are echoing anti-Israel messages online. He assesses that Iran is behind many of them, with others being operated by Hamas, Islamic Jihad and Hezbollah. Some of these bots are spreading a conspiracy theory about "traitors from within" who aided Hamas – a theme also echoed by Israeli users. "The goal is to create a rift in the nation and bring down morale, to make us feel like we have no chance," he explains.

Tytunovich told Ynet that CHEQ has taken on promoting Israeli hasbara (public diplomacy). The company reports fake accounts it identifies to social networks, and operates bots spreading pro-Israel messages on Telegram.

"On Telegram, there is no moderation, no law, nothing sane, nothing," says Tytunovich. "In pro-Hamas or anti-Israel groups, when they celebrate with horrific videos, it's worth sending them videos that show what cowards they are." Ynet has learned that there are also bots spreading similar messages on X (formerly Twitter), Instagram, TikTok and further platforms.

Is there any difference between the various platforms in terms of content?

Tytunovich: "Horrific content and the erosion of Israeli deterrence are mainly on Telegram. Bots and fake news are primarily on X, but also on Instagram. TikTok, where it’s harder to upload videos with horrific content, is awash with all kinds of bots – but there’s a lot of fake news there."

X bears much of the responsibility for the current online chaos. Dar says that most of the conversation about the war takes place on this platform and, according to X itself, 50 million posts have thus far been published on the subject. On Monday, X's safety team announced that the company had decided to focus on protecting the conversation about the war by enforcing its Community Standards, including deleting hundreds of accounts that attempted to manipulate the conversation.

One important account responsible for disinformation about the war that has not been deleted belongs to X's owner himself, Elon Musk. The world's richest man advised users to follow two accounts to help them track events in real time, but vigilant users and journalists noticed that both accounts had previously shared an inauthentic photo, and that one of them had made antisemitic remarks.

Musk was forced to delete the post. On another occasion, Musk responded to a post claiming that "we saw more images in the mainstream media of the war in Israel in two hours than of the war in Ukraine over two years." Musk replied "Weird," echoing this dubious claim to hundreds of millions of users.

But Musk definitely isn’t the only one spreading disinformation on X. Platform users share images from previous conflicts and even from video games, posting them as authentic accounts of the war. Three hundred thousand people viewed a video claiming to document Israel dropping phosphorus bombs on Gaza - but it became apparent that the video was, in fact, from the war in Ukraine. Other posts, published by verified X accounts, claimed that Israel destroyed the Church of St. Porphyrius in Gaza – perhaps in an attempt to turn the Christian world against Israel. The church itself has denied it.

The situation at X is so worrying that the European Commissioner for Internal Market, Thierry Breton, has written to Musk saying there were indications that his platform was being used to spread illegal content and information in the EU about the war. Breton demanded that Musk get back to him within 24 hours regarding steps taken to address the crisis and warned that the EU could impose fines on X. Meanwhile, researchers claim that in recent months the platform has disabled an internal tool helping combat disinformation.

Tytunovich notes that, for 18 months now, "Elon Musk has been talking about the bot problem on Twitter, but he still hasn't solved the problem." He says that even apparently verified X accounts with a blue check next to them may be bots, as this verification badge can be purchased for a fee. "It's not very economic, but for a country like Iran it's not very expensive to spend 8 million dollars on a thing like this."

Dr. Gilad Leibovich, Technological Courses Academic Manager at the Technion - Israel Institute of Technology, notes that ideological motives are not the only ones behind the spread of fake news and conspiracy theories about the war. As we saw with COVID-19, the war in Ukraine, and countless other crises in the past, some people take advantage of the situation to run scams targeting those affected by it. Others simply want traffic for attention or financial gain.

"You can now find everything on social networks," Leibovich concludes. "Everything from private fraud, using old media from past events or unrelated contexts to extract financial profit or garner viewers and generate shares through to large number of accounts suddenly 'popping up' for propaganda purposes. It’s on both sides. There’s now also a push from the Israeli side to spread their message – not fake of course."

"This chaos has meant that we’re only now seeing the big companies starting to become aggressive about content monitoring." Leibovich adds "The main problem is the reach, that even the big companies find hard to address. The algorithm’s there, the technology exists, but the scope of the posts is so extensive that by the time things get moving, the filtering is almost ineffective." Leibovich explains that it's much harder to curb fake news when it comes to messaging apps such as WhatsApp and Telegram, which have fewer content-filtering technologies.

Is there anything the average user can do to ensure they’re exposed to reliable information, or is it a lost cause?

Leibovich: "First, yes, it is a lost cause, but there are definitely things that can be done. All the posts we see with endless shares are prone to disaster. In information and cyber security, we aim to avoid being part of some kind of electronic army, as these are usually motivated by unreliable interests. Sometimes they're in your favor, but sometimes they’re against you. So, the best thing I can suggest is just not to be part of it. When we look back, we'll identify the propaganda, and we want to have been on the side that wasn't part of it."

Another problem on social media is the horrific videos of the Hamas attack on IDF bases and communities near the Gaza Strip. These videos are distributed unrestricted on Telegram, whose terms of use are rather lenient, but they’re also popping up on accounts on Instagram, TikTok and other platforms. They’re spread by both sides – both by Hamas supporters wanting to glorify the terrorist organization, and by Israelis and pro-Israel accounts wanting to show the atrocities to the world.

We forwarded to Meta a series of links to Instagram posts with videos documenting the abductions of Israelis and the desecration of corpses, asking for an explanation as to why they were posted on a platform whose user policy explicitly states that it forbids content glorifying violence.

Meta’s response was evasive: "We have set up a task force dedicated to the situation, with global teams including Arabic and Hebrew speakers who are closely monitoring the situation. We're focusing on enforcing our rules to keep the community safe, while ensuring that people can continue sharing what’s important to them."

TikTok has also been a cause for concern over the past few days, in light of the difficult videos and fake news circulating on that platform. Yesterday, Israel’s Parents Association issued a call to block the app in order to prevent children from accessing difficult or shocking content. However, we have also received a complaint that the platform is not allowing people to upload legitimate news videos about the war.

TikTok’s response: "As always, and more so since the beginning of the war, we’re regularly monitoring content on the platform that threatens the safety of our community, while working to remove content encouraging offensive and violent speech or that contains incitement or misinformation. We are constantly monitoring events as they unfold. We have allocated resources and will allocate further resources as necessary to maintain TikTok as a safe space."

Telegram did not respond to Ynet's request for comment. X generated an automated response: "Busy at the moment, please check back later." At X, they seem to have more urgent things to deal with right now.