Researchers from Google, Tel Aviv University, the Weizmann Institute and the Technion have presented a new text-to-video AI model called Lumiere, which portrays "realistic, diverse and coherent motion." For now it remains a research project: Google has not released the model, nor has it said whether it intends to do so in the future.

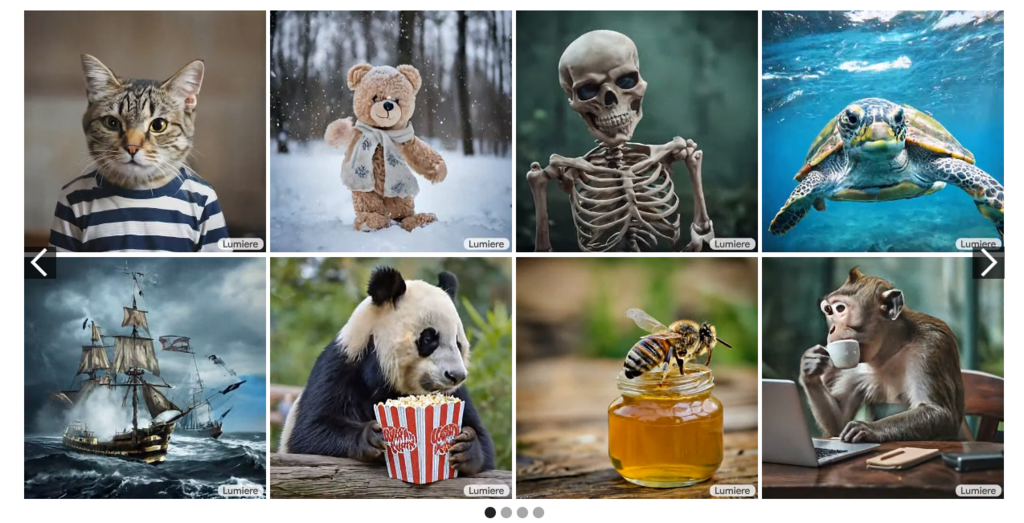

Lumiere, named after the pioneering Lumière brothers, generates five-second videos at a resolution of 1024x1024 from text prompts, and can also animate existing images. It can create animations in different styles (such as watercolor or sticker) based on a reference image it is given, and it can change the style of an existing video, for example turning a realistic character into a cartoon, or into one made of flowers or wood. Additional features include filling in missing parts of a video (inpainting) and expanding its frame (outpainting).

Video creation using artificial intelligence is a relatively new field, but it has been gaining momentum in recent months. Tools such as Runway, Pika and Stable Diffusion already exist on the market. Just last month Ynet posted a video of Ismail Haniyeh rapping which was created using one of these tools. However, the researchers behind Lumiere claim that the existing models on the market are limited in terms of the length of the videos, the quality of the image and the realism of the movement they can produce.

According to Google's researchers, they developed a more efficient method that processes all the video frames at once, as opposed to the tools on the market, which create several keyframes and then fill in the missing information between them. The researchers also compared Lumiere with the existing tools on the market; according to their comparison, Lumiere produces higher-quality videos that are longer and contain more motion.
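To illustrate why filling in frames between keyframes can lose motion, here is a minimal toy sketch in Python. It is not Lumiere's architecture and not any existing tool's pipeline: the oscillating point, the function names and the keyframe spacing are all assumptions made up for the example. It only contrasts "compute sparse keyframes, then interpolate" with "compute every frame directly."

```python
import math

def true_position(t):
    """Hypothetical ground-truth motion: a point oscillating with a period of 8 frames."""
    return math.sin(2 * math.pi * t / 8)

def keyframes_then_interpolate(num_frames, stride):
    """Sample sparse keyframes, then fill the gaps by linear interpolation."""
    keys = list(range(0, num_frames, stride))
    if keys[-1] != num_frames - 1:
        keys.append(num_frames - 1)  # always include the final frame
    frames = [true_position(0)] * num_frames
    for a, b in zip(keys, keys[1:]):
        pa, pb = true_position(a), true_position(b)
        for t in range(a, b + 1):
            w = (t - a) / (b - a)  # interpolation weight between the two keyframes
            frames[t] = (1 - w) * pa + w * pb
    return frames

def all_frames_at_once(num_frames):
    """Stand-in for a model that produces every frame directly (toy assumption)."""
    return [true_position(t) for t in range(num_frames)]

def max_error(frames):
    """Largest deviation from the true motion over all frames."""
    return max(abs(f - true_position(t)) for t, f in enumerate(frames))

if __name__ == "__main__":
    print("keyframes + interpolation:", max_error(keyframes_then_interpolate(17, 4)))
    print("all frames at once:       ", max_error(all_frames_at_once(17)))
```

With keyframes every four frames, each keyframe happens to catch the point at its resting position, so the interpolated video barely moves and the maximum error is close to 1. The all-frames version, by construction, has no error. Real systems are far more sophisticated, but the sketch shows how motion that falls between keyframes can be lost.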

Along with the academic paper on the new model, the researchers uploaded a demonstration video that looks quite impressive, although it is difficult to know whether the clips shown reflect the model's real capabilities or whether the researchers cherry-picked them.

The researchers note Lumiere's limitations in their paper: the model cannot produce videos made up of multiple shots, or ones that include transitions between scenes, as achieving coherent video rendering has proven to be a challenge for contemporary AI models. They also warn that the technology could be misused, and call for the development and implementation of tools that identify biases and malicious use in order to ensure safe and fair use.

Dr. Tali Dekel from the Weizmann Institute and Google, one of the researchers behind the new model, explained that there are significant challenges in creating videos using artificial intelligence. "The amount of data is much larger since space and time do not behave in videos like they do in photos. For us to see a very significant breakthrough in the field, there will have to be much deeper work here on how to process information in time, represent movement and edit out the excess information that is in the video. But I'm sure it will happen," she said.

The work on Lumiere has been led for the past six months by a research group at the Google Research and Development Center in Tel Aviv, under the direction of Inbar Mosseri. Alongside Dekel, the researchers who worked on the model are Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Yuanzhen Li, Tomer Michaeli, Oliver Wang and Deqing Sun.