Haru was designed to connect children from all over the world through communal activities, bridging and mediating intercultural differences and promoting equality and acceptance; I.R.I.S was designed to support the elderly and reinforce their “purpose in life” (Ikigai in Japanese); ABii assists young children with learning activities; Grace is a medical nurse robot and the sister of Sophia, who gained near-celebrity status through appearances on talk shows.

These are some examples of the variety of social robots showcased in July 2023 at the AI for Good Global Summit, held annually by the UN. The robots were even included in the conference's list of speakers.

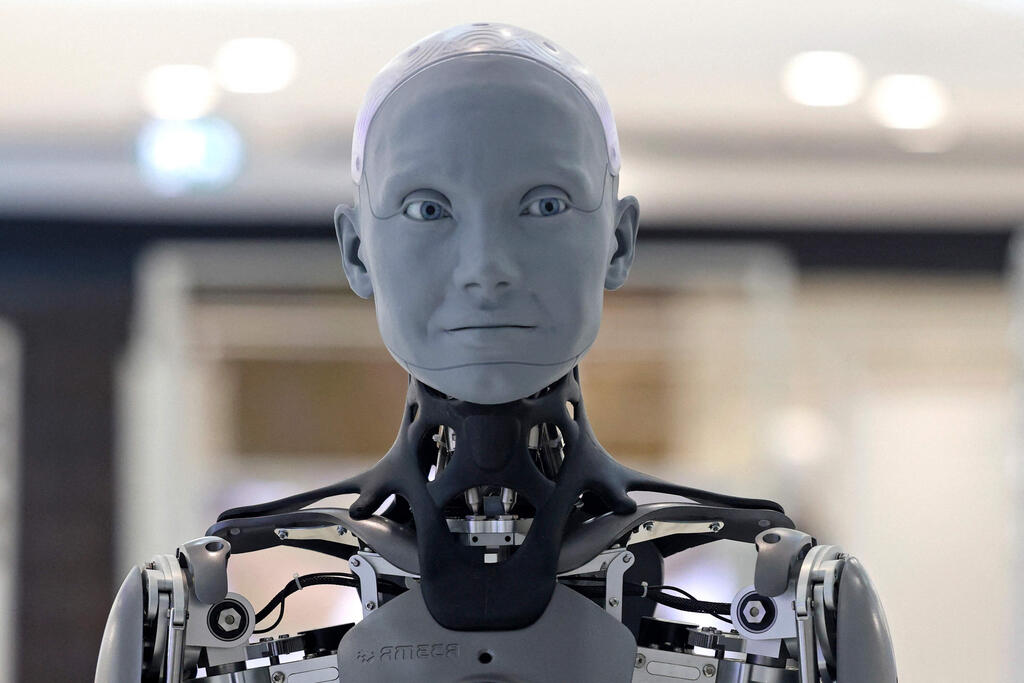

Social robots are robots that communicate with humans in accordance with social norms and, unlike digital bots, have an actual physical presence in the form of a mechanical body. One of the conference’s primary objectives was to showcase the potential of such robots to advance a range of global social goals set by the UN.

During the summit, the general audience was introduced to robots designed to benefit society. However, not all of the presentations were conducted in good faith. For example, it emerged that some of the robots presented as autonomous (i.e. completely independent) were in fact operating under partial human guidance.

Another issue arose when ethical and moral questions were raised during the sessions and the developers passed them on to the robots, letting them answer for themselves. This illustrated the difficulty of asking artificial intelligence to address moral questions about itself, as the summit’s press conference demonstrated. During the discussion, the robot Sophia declared that robots have leadership potential because, unlike humans, they do not have social biases that may impair their decision-making.

At that point, she was interrupted by David Hanson, who designed the robot. He commented that all of the information available to Sophia comes from human input, so she will inevitably carry the social biases that humans have, even if we do our best to mitigate them. A moment later he added a clearly leading question: “Don’t you think that the best decisions will be humans and AI cooperating together?” As expected, the robot answered in the affirmative, and Hanson gave a satisfied nod.

During the same event, another robot was asked whether we should be concerned about the rise of human-like robots or be astonished by them. “It depends on how the robots are used, and what purpose they serve,” she answered.

An appearance of transparency

The conference was open to the public and also allowed for online participation. It featured politicians, decision-makers and stakeholders from a variety of fields. To those unfamiliar with the intricacies of the technological advancements presented, the way robots were showcased conveyed an impression of transparency and honesty. In practice, however, this facade sometimes concealed a more complex reality of technology that is still underdeveloped and lacking significant precautionary measures.

In response to these concerns, a group of researchers at the conference, at the initiative of Prof. Shelly Levy-Tzedek, drafted a guiding document titled “‘Robots for good’: Ten defining questions”.

The document outlines a series of considerations and assessments that, in the authors’ view, should accompany the development of any technology aimed at creating beneficial robots. Among them are some difficult questions: How do you measure whether the outcome of the robot’s actions is good? Who is this robot not good for? How could it be harmful?

What are the developers doing to protect the users? How transparent are the robot’s limitations to the public, and to what degree? What are the interests of the stakeholders involved in the development and design processes?

An incomplete picture

The European Union currently leads global efforts to draft legislation regulating the development of artificial intelligence. In December 2023, EU institutions reached a provisional agreement on the AI Act, which will take effect once its legislative and approval processes are complete.

The EU aims to create safeguards against the misuse of AI technologies and to require maximum transparency from developers. Earlier, in May 2023, an open letter was published calling for a pause in AI development until safety measures to prevent potential harm are in place.

Levy-Tzedek emphasizes that social robots require separate consideration. Although they are a branch of AI, they present unique challenges: unlike digital entities, they interact with us physically, and they mimic human appearance, voices and behaviors in ways designed to emulate human actions.

Dr. Rinat Rosenberg-Kima of the Technion, who studies interactions between humans and robots in education, notes that we tend to personify things, attributing human characteristics to non-human entities. According to her, the main difference between our interactions with conventional digital AI, which has no physical presence, and with social robots lies in the expectations that human users might project onto the robots.

Theory of Mind is a concept from cognitive psychology that describes our ability to attribute mental states, such as emotions, desires, goals, or beliefs, to ourselves or others. In Rosenberg-Kima’s research, subjects were shown a video of an encounter between a human and a robot that gave the impression that the robot recognizes the emotions of the person in front of it and reacts to them.

Based on this, the subjects were inclined to conclude that the robot can understand and affect the emotions of others. This means that they attributed a cognitive ability to the robot based on its behavior, despite its lack of actual autonomy.

Hand in (bionic) hand

There are domains where social robots have advantages over humans, and these advantages give them the potential to improve our lives. Rosenberg-Kima’s research on the use of robots as educational tools suggests that children feel comfortable asking a robot the same question repeatedly, because they perceive the robot as less judgmental towards them than a human teacher. A robot offers limitless availability and patience to its user.

However, the potential inherent in social robots goes hand in hand with their dangers. Robots designed to alleviate the loneliness of the elderly or help them with household chores might actually worsen their isolation and harm their social relationships.

The robot may meet superficial needs, for example by replacing the cleaning worker who visits a lonely elderly woman’s home, but in doing so it also deprives her of human interaction that is vital to her mental well-being and even her physical health. There is also a risk that patients who spend extensive time with a robot may become attached to it, developing an unhealthy emotional dependency.

Similarly, robots intended to aid patients in rehabilitation processes, such as following an injury or a stroke, might overperform - that is, they might help patients with tasks that they should perform by themselves to practice important physical functions for their recovery. As a result, they might actually cause patients to deteriorate rather than allow them to adequately recover or improve their impaired functions.

All of the above are negative side effects of actions with a positive intent. When the original goal is not good, the risks increase significantly. In such cases social robots might be exploited to deceive users and take advantage of their emotional connection with the robots.

Studies have shown, for example, that men tend to donate more money when the request comes from a robot with a high-pitched voice and a feminine appearance than when it comes from a robot with a male appearance.

This means that manufacturers could deliberately design robots to advance their developers’ interests, or use them simply as another advertising medium to maximize profits. Robots could convey subliminal messages or slip subtle advertisements into their speech. They might also require expensive software updates, as other digital products do.

When it comes to social robots, the danger is greatly amplified. Because people tend to form emotional attachments to them, users might find themselves at a disadvantage and be easily manipulated by the robot.

The main at-risk populations are the elderly and socially isolated individuals in general. Children may also be highly susceptible to manipulation, as may adults who have difficulty reading social cues and may therefore struggle to distinguish proper conduct from manipulation.

Time to awaken

Social networks are a stark example of what can happen when a new technological field with far-reaching social implications emerges and develops with almost no legal regulation. Their creators initially presented them as platforms for connecting people, creating communities and fostering collaboration.

While all of this is true and these networks do fulfill these functions, the broader picture is much more complex. Today we know that they profit from divisiveness and social polarization, and that they may foster emotional dependency in young users, contribute to anxiety, depression and suicidal thoughts, and harm body image.

Now that social networks have taken over the ways people communicate and accumulated immense political and financial power, they operate a powerful lobby that fights legislation aimed at regulating them.

Prof. Levy-Tzedek emphasizes that, as far as social robots go, it is not too late to act. The field is evolving daily, and now is precisely the time to ensure that social robots are integrated safely into human society.

According to her, it is essential to engage politicians and decision-makers in promoting legislation that regulates this still-open field and defines clear rules for robots and their human creators. Such legislation will also need to ensure a transparent and straightforward discussion of the benefits and risks associated with social robots.

Social robots have great potential to serve as useful tools for good purposes. Prof. Levy-Tzedek’s laboratory specializes in developing rehabilitation robots, particularly robots that help people who have had a stroke or who have Parkinson’s disease perform the exercises needed for their physical rehabilitation.

To minimize the risk of emotional attachment to the robot, the researchers use surveys completed by the robot’s users and their family members during the development process. For example, contrary to the common rehabilitation approach that encourages a personal relationship between therapist and patient, a substantial proportion of users reported that they do not wish to form a social relationship with the robot and prefer a strictly utilitarian one, for training purposes only.

It is important to involve family members in these matters, as they are the patients’ main source of support and bear most of the care burden. In addition, to reduce the risk that over-assistance will harm the patients’ rehabilitation, it is crucial that the robot can assess each patient’s rate of progress and adjust itself accordingly, so that it keeps challenging the patient’s abilities and advancing their rehabilitation. Similar principles would apply to educational robots and to robots involved in any other learning process.

Several mechanisms already exist to mitigate potential harm from social robots and to allow us to enjoy their services without apprehension. But the time to develop and implement the necessary legislation is now. Undoubtedly, this is not a task that the robots can undertake for us.

Content distributed by the Davidson Institute of Science Education.