Since the October 7, 2023, Hamas massacre, which marked one of the deadliest days for Jews since the Holocaust, antisemitic speech has spread online like wildfire. Telegram, known for its stringent privacy features and minimal content moderation, has since become a haven for groups that incite violence against Jews and the Jewish state.

A newly released report by the Anti-Defamation League (ADL) tracked a sample of 793 channels and groups and 7,769 users, each of which posted at least one violent antisemitic or anti-Israel message between April 2023 and 2024. The analysis found that on the day of the attacks, antisemitic posts surged by 433.76%, skyrocketing from a daily average of 238.12 to 1,271. Although the spike has since subsided, the current average of 321.22 posts per day remains significantly higher than pre-attack levels.

Antisemitic conspiracy theories spreading across various platforms include false accusations that Israelis harvest organs from Palestinians, that Jews were responsible for the 9/11 attacks, that Jews control the media and the U.S. government, and that Jews promote the "LGBTQ+ agenda," as well as distortions of the October 7, 2023, attacks that falsely suggest Israel orchestrated the violence.

The influence of antisemitic content is measured by the volume of posts and by user engagement, particularly how frequently these posts are shared. The ADL's analysis revealed that before October 7, an average of 12.18 unique users forwarded extreme antisemitic posts each week, a figure that rose to 20.96 afterward. In addition, the average number of violent antisemitic and anti-Israel messages forwarded each week rose from 204.03 to 346.4.

Daniel Kelley, director of Strategy and Operations and head of the ADL Center for Technology & Society, discussed the report's concerning findings.

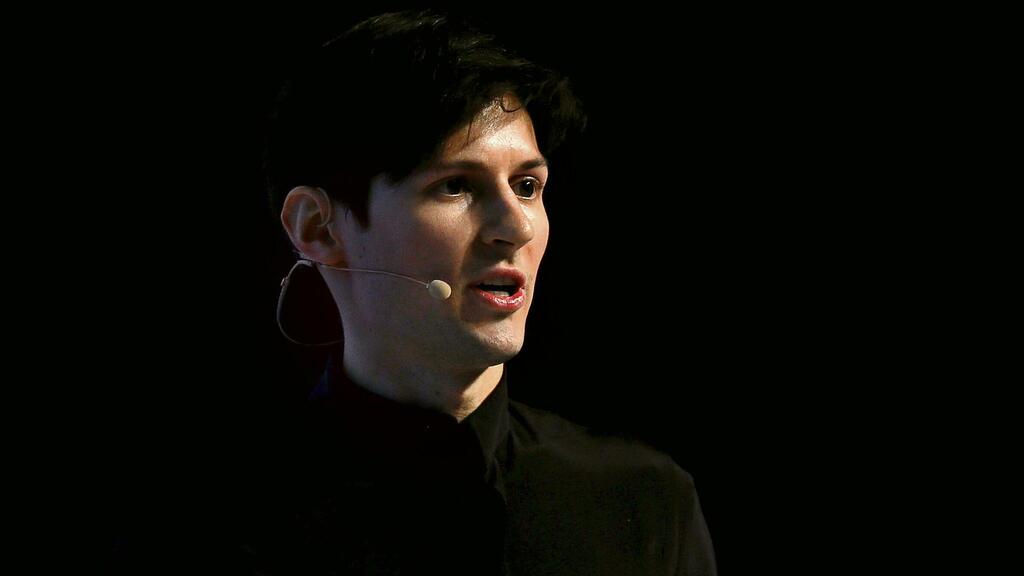

“After the arrest by French authorities [of Telegram CEO Pavel Durov] earlier this year, Telegram started sharing some data with governments, which they hadn’t done before. However, they haven’t changed their core rules,” Kelley said.

“They did add one rule to their terms of service, which says if something is illegal in many countries, they will take action. But they still don’t have fundamental content moderation measures in place. Telegram doesn’t prohibit hate speech or have rules against extremism or support for extremists,” Kelley added.

Although many experts consider such rules basic standards that most platforms try to enforce, Telegram appears reluctant to acknowledge that hate against marginalized groups is unacceptable on its platform, even as it has recently moved to prohibit clearly illegal content.

Arik Segal, the founder and CEO of Conntix, a consultancy that promotes effective and constructive communication on virtual platforms, explained why he believes Telegram’s privacy changes won’t have the desired effect.

“I don't think the new privacy policies will have any impact. First, I believe that most people are not aware of the changes. Second, people don't see hateful messaging as a crime but as a legitimate communication method. Lastly, I don't think the police will have time and resources to deal with anything that is not strictly criminal, so people will not see any enforcement,” he said.

According to Segal, curbing hate requires coordinated legal and technological changes that protect free speech while shutting down antisemitism. He argued that local laws against hate and incitement are the necessary first step, because platforms like Telegram have a business model that thrives on viral, often fake content, including antisemitic conspiracy theories. “In short, they will only act if there is a threatening regulation,” the Conntix CEO said.

David Strom, a St. Louis-based technology expert who has written about online communications for more than 35 years, said he is also skeptical that Telegram’s new privacy policies will have the desired effect.

“Telegram better be more reactive, or they will get massive fines. I believe that businesses that rely on Telegram for their communications will switch to strongly encrypted emails and VPNs that don’t share users' data and migrate from Telegram to Signal for messaging, for example,” Strom said.

Strom also doubts that newly developed AI moderation tools will be effective. “To do content moderation properly, you need human eyes on and a big enough staff to fix the problems of false positive flags.”

With Telegram’s CEO having recently said that the platform employs only “about 30 engineers,” experts expect antisemitism to continue to flourish there. Given Telegram’s unwillingness to take effective measures, the responsibility falls to public authorities. Yet Segal doubts they will step in: “As long as people don't hear about a person that was thrown into jail due to controversial speech, they will not change their behavior. The police will not act since they don't have the willingness and resources to do so,” he said.

Although many believe that hateful and violent content is a matter of opinion, Kelley explained that “we’re not dealing with grey areas here, like questioning whether something is hate or not. The new rules are focused specifically on illegal content – things like terrorism and child pornography, which are illegal in most countries. They’re now prohibiting activities like child abuse and selling illicit goods, including weapons. For example, selling guns on Telegram is now explicitly banned, which wasn’t the case before. But even then, enforcement is spotty. Telegram has had a rule for years against promoting violence on publicly viewable channels, but ADL found channels sharing footage from the October 7, 2023 attacks in Israel and celebrating it.”

Because Telegram seems indifferent to the violent and racist content on its platform, the ADL took the issue to other players in the tech sector. Google and Apple, which wield considerable power to help curb antisemitism online, have restricted access to specific Telegram channels. These measures can still be circumvented, however: the channels remain accessible through web browsers and other means outside Apple’s and Google’s ecosystems. Kelley considers these restrictions the bare minimum, and not a matter for debate, since the channels in question promote explicit violence and hate.

As for countering hate speech on Telegram itself, Kelley doesn’t believe it is the right platform for such work, because most radicalized people there are unwilling to reconsider their worldview. “They’re unmoveable in that sense,” he said.

According to Kelley, other platforms may be more effective for reaching people who aren’t fully committed to extremist positions. “TikTok, YouTube or Facebook can be valuable where there’s more room for dialogue,” he said.

While acknowledging that TikTok has many problems of its own, the ADL said the platform usually addresses complaints that the ADL reports. When an average user reports the same content, however, TikTok is much less likely to act on it.

TikTok has recently stepped up its efforts to protect users amid the ongoing conflict. The platform has strengthened its Community Guidelines, expanding its policies against hate speech to cover indirect references to antisemitism, such as misuse of the term "Zionist."

TikTok says it has removed more than 4.7 million videos and suspended 300,000 livestreams for violations related to hate speech and misinformation since October 7, 2023. The company says it has also removed 500 million fake accounts globally and significantly ramped up content moderation with enhanced automated tools and trained moderators. Initiatives like the #SwipeOutHate campaign aim to engage the community in reporting hateful content.

Get the Ynetnews app on your smartphone: